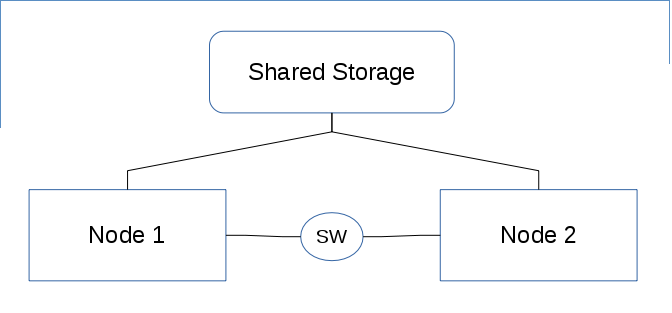

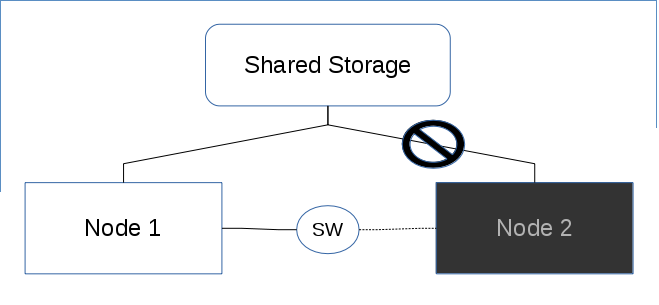

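In a cluster, when a node fails, the surviving nodes take over its services. Before taking over, however, the surviving nodes must be sure that the failed node is no longer accessing shared storage. Fencing cuts the failed node off from the storage so that data is not corrupted.

How power fencing works

With power fencing, a surviving node typically powers off the failed node through its remote management card (iLO, iDRAC, etc.). Once it has lost power, the failed node can no longer reach shared storage.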

How IO fencing works

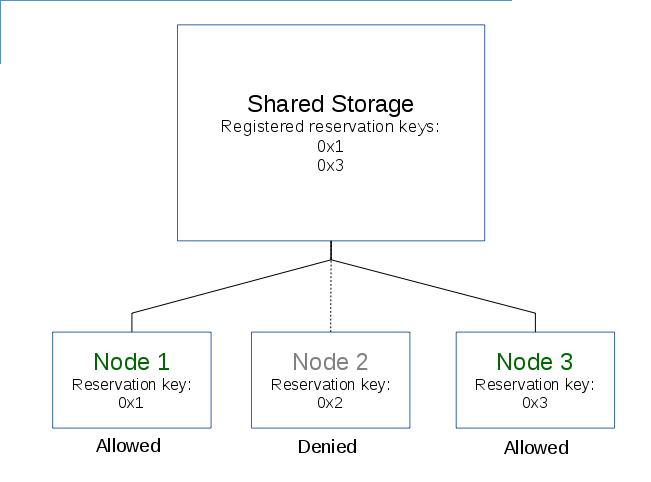

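IO fencing works at the storage layer instead and blocks the failed node's access there. SCSI storage devices can do this with SCSI reservation keys.

First, each node defines its own reservation key, for example 0x1, 0x2, 0x3. The values are arbitrary as long as each node's key is unique. In normal operation, every node must register its reservation key with the storage before it can access it. If a node fails, another node sends the storage a command that removes the failed node's reservation key, which stops the failed node from accessing the storage.

For example, nodes 1, 2 and 3 hold keys 0x1, 0x2 and 0x3. If the cluster detects that node 2 has failed, it sends the storage a command to remove key 0x2, and node 2 can then no longer access the storage.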
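The register, list and remove cycle described above can be sketched with sg_persist from the sg3_utils package. This is a minimal sketch under stated assumptions, not the exact sequence a cluster stack runs: /dev/sdX and the key values are placeholders, and the helper names (run, register_key, read_keys, remove_key) are made up for illustration. By default the helpers only print the commands; setting DRY_RUN=0 would execute them against a real device.

```shell
# Print each command instead of executing it unless DRY_RUN=0 is set,
# so the sketch can be tried without touching a real device.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Register this node's own key with the device.
# usage: register_key DEVICE OWN_KEY
register_key() {
    run sg_persist --out --register --param-sark="$2" "$1"
}

# List the keys currently registered on the device.
# usage: read_keys DEVICE
read_keys() {
    run sg_persist --in --read-keys "$1"
}

# Remove a failed node's key. The surviving node identifies itself with its
# own registered key (--param-rk) and names the key to evict (--param-sark).
# Reservation type 5 is "write exclusive, registrants only".
# usage: remove_key DEVICE OWN_KEY FAILED_KEY
remove_key() {
    run sg_persist --out --preempt-abort --prout-type=5 \
        --param-rk="$2" --param-sark="$3" "$1"
}
```

For instance, `remove_key /dev/sdX 0x1 0x2` prints the command node 1 would issue to evict node 2's key.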

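Power fence vs. IO fence

Both power fencing and IO fencing stop a failed node from accessing storage. Power fencing is recommended, with IO fencing as a supplement. IO fencing can also be used on its own, but with some limitations:

- After IO fencing, the failed node must be rebooted manually to recover. Power fencing restarts the node at the power level and needs no manual intervention;

- If the failed node holds a floating IP and the failure is only a broken heartbeat network, so that the node is in fact still on the network, another node taking over the floating IP can cause an IP conflict and make the service unavailable.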

fence_scsi

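fence_scsi, one of the fence agents shipped with RHEL 7, performs IO fencing on SCSI devices. It generates a reservation key for each node automatically (per the agent description below, the key is derived from the node name), so keys do not have to be configured by hand.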

[root@pcmk1 ~]# pcs stonith describe fence_scsi

fence_scsi - Fence agent for SCSI persistent reservation

fence_scsi is an I/O fencing agent that uses SCSI-3 persistent reservations to control access to shared storage devices. These devices must support SCSI-3 persistent reservations (SPC-3 or greater) as well as the "preempt-and-abort" subcommand.

The fence_scsi agent works by having each node in the cluster register a unique key with the SCSI device(s). Once registered, a single node will become the reservation holder by creating a "write exclusive, registrants only" reservation on the device(s). The result is that only registered nodes may write to the device(s). When a node failure occurs, the fence_scsi agent will remove the key belonging to the failed node from the device(s). The failed node will no longer be able to write to the device(s). A manual reboot is required.

Stonith options:

nodename: Name of the node to be fenced. The node name is used to generate the key value used for the current operation. This option will be ignored when used with the -k option.

key: Key to use for the current operation. This key should be unique to a node. For the "on" action, the key specifies the key use to register the local node. For the "off" action, this key specifies the key to be removed from the device(s).

devices: List of devices to use for current operation. Devices can be comma-separated list of raw devices (eg. /dev/sdc). Each device must support SCSI-3 persistent reservations.

[...]

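In Pacemaker, a fence_scsi resource can be added with the command below. In pcmk_host_list, list the hostnames of all nodes, separated by spaces. In devices, list the paths of all shared storage devices. Once the resource is added, fence_scsi generates a reservation key for every node it manages and registers it with the storage automatically. (When a node starts, it performs an "unfencing" operation, which in practice registers the key with the storage.)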

# pcs stonith create scsi fence_scsi pcmk_host_list="node1.example.com node2.example.com" pcmk_reboot_action="off" devices="/dev/mapper/disk-a, /dev/mapper/disk-b" meta provides="unfencing" --force
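Because devices takes a comma-separated list, it can be convenient to assemble the command from a node list and a device list instead of typing it by hand. The sketch below only prints a pcs command and does not run it. join_by and fence_scsi_create_cmd are hypothetical helper names; the resource name scsi and the option values are copied from the example above.

```shell
# Join the given items with a separator and no surrounding spaces.
# usage: join_by SEP ITEM...
join_by() {
    sep=$1
    shift
    out=
    for item in "$@"; do
        out="${out:+$out$sep}$item"
    done
    printf '%s\n' "$out"
}

# Print a pcs command that creates one fence_scsi resource covering all nodes.
# usage: fence_scsi_create_cmd "NODE1 NODE2 ..." DEVICE...
fence_scsi_create_cmd() {
    nodes=$1
    shift
    devices=$(join_by , "$@")
    printf 'pcs stonith create scsi fence_scsi pcmk_host_list="%s" pcmk_reboot_action="off" devices="%s" meta provides="unfencing"\n' \
        "$nodes" "$devices"
}
```

Calling `fence_scsi_create_cmd "node1.example.com node2.example.com" /dev/mapper/disk-a /dev/mapper/disk-b` prints a single command line that can be reviewed before it is run.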

fence_mpath

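fence_scsi is not suitable for multipath devices. For multipath storage aggregated with device-mapper-multipath, fence_mpath can be used as the fence agent.

It works on essentially the same principle as fence_scsi; only the configuration differs:

- a separate fence_mpath resource must be created for each node;

- the key must be specified explicitly in the fence_mpath configuration; it is not generated automatically.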

[root@pcmk1 ~]# pcs stonith describe fence_mpath

fence_mpath - Fence agent for multipath persistent reservation

fence_mpath is an I/O fencing agent that uses SCSI-3 persistent reservations to control access multipath devices. Underlying devices must support SCSI-3 persistent reservations (SPC-3 or greater) as well as the "preempt-and-abort" subcommand.

The fence_mpath agent works by having a unique key for each node that has to be set in /etc/multipath.conf. Once registered, a single node will become the reservation holder by creating a "write exclusive, registrants only" reservation on the device(s). The result is that only registered nodes may write to the device(s). When a node failure occurs, the fence_mpath agent will remove the key belonging to the failed node from the device(s). The failed node will no longer be able to write to the device(s). A manual reboot is required.

Stonith options:

[...]

key (required): Key to use for the current operation. This key should be unique to a node and have to be written in /etc/multipath.conf. For the "on" action, the key specifies the key use to register the local node. For the "off" action, this key specifies the key to be removed from the device(s).

devices: List of devices to use for current operation. Devices can be comma-separated list of device-mapper multipath devices (eg. /dev/mapper/3600508b400105df70000e00000ac0000 or /dev/mapper/mpath1). Each device must support SCSI-3 persistent reservations.

[...]

Configuration steps:

1. In /etc/multipath.conf on each node, define that node's reservation key. For example, on node 1:

defaults {

user_friendly_names yes

find_multipaths yes

reservation_key 0x1

}

Likewise, node 2 must set its own unique reservation key in /etc/multipath.conf, for example 0x2.

2. Create a separate stonith resource for each node:

# pcs stonith create pcmk1-mpath fence_mpath key=1 pcmk_host_list="pcmk1" pcmk_reboot_action="off" devices="/dev/mapper/mpatha" meta provides="unfencing"

# pcs stonith create pcmk2-mpath fence_mpath key=2 pcmk_host_list="pcmk2" pcmk_reboot_action="off" devices="/dev/mapper/mpatha" meta provides="unfencing"

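3. Verify that the nodes can access the storage normally, then verify that a node whose heartbeat link has been cut can no longer access it (the expected result is that it cannot).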
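The key given to fence_mpath has to match the reservation_key in that node's /etc/multipath.conf, so one way to keep the two in sync is to derive the pcs command from the file. The following is a minimal sketch, not part of any tool: reservation_key and fence_mpath_create_cmd are hypothetical helper names, the <node>-mpath resource naming follows the commands above, and dropping the 0x prefix is an assumption based on those commands pairing reservation_key 0x1 with key=1. The command is printed, not executed.

```shell
# Print the reservation_key value from a multipath.conf-style file.
# Commented-out lines do not match; the first active setting wins.
# usage: reservation_key [CONF_FILE]
reservation_key() {
    awk '$1 == "reservation_key" { gsub(/"/, "", $2); print $2; exit }' \
        "${1:-/etc/multipath.conf}"
}

# Print the pcs command that creates the fence_mpath resource for one node,
# taking the key from the given multipath.conf.
# usage: fence_mpath_create_cmd NODE DEVICE [CONF_FILE]
fence_mpath_create_cmd() {
    key=$(reservation_key "${3:-/etc/multipath.conf}") || return 1
    if [ -z "$key" ]; then
        echo "no reservation_key found" >&2
        return 1
    fi
    key=${key#0x}    # assumption: the agent is given the hex value without 0x
    printf 'pcs stonith create %s-mpath fence_mpath key=%s pcmk_host_list="%s" pcmk_reboot_action="off" devices="%s" meta provides="unfencing"\n' \
        "$1" "$key" "$1" "$2"
}
```

Run on each node in turn (for example `fence_mpath_create_cmd pcmk1 /dev/mapper/mpatha` on pcmk1), this yields one command per node, each carrying that node's own key.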

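Testing and verification

Before configuring fence_mpath as described above, you can test it by hand to confirm that the storage supports it and that it behaves as expected.

1. If the cluster is still running, stop the cluster services on all nodes before testing.

2. Define each node's reservation key in /etc/multipath.conf as described above.

3. On both nodes, perform the unfencing action, that is, register the key with the storage: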

[root@pcmk1 ~]# fence_mpath --devices="/dev/mapper/gfs2" --key=1 --action=on -v

[root@pcmk2 ~]# fence_mpath --devices="/dev/mapper/gfs2" --key=2 --action=on -v

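At this point both nodes should be able to access the storage (for example, mount the file system). Note that a non-shared file system such as xfs/ext3/ext4 must not be mounted on both nodes at the same time, or data will be corrupted.

4. Fence one of the nodes; the fenced node will no longer be able to access the storage.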

[root@pcmk1 ~]# fence_mpath --devices="/dev/mapper/gfs2" --key=2 --action=off -v

[root@pcmk2 ~]# mount /dev/mapper/gfs2 /mnt/shared_xfs_mount/

mount: mount /dev/mapper/gfs2 on /mnt/shared_xfs_mount failed: Invalid exchange

You can also list the keys currently registered on the shared storage with the following command:

# mpathpersist -i -k -d /dev/mapper/gfs2

0 PR generation=0x11, 2 registered reservation keys follow:

0x1

0x1
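To check the result of a fence test from a script, the key list can be parsed from that output. The sketch below assumes the layout shown above (one 0x-prefixed key per line after a header line); registered_keys and key_is_registered are hypothetical helper names. The same key may appear more than once in the output, so duplicates are collapsed.

```shell
# Read "mpathpersist -i -k" output on stdin and print the registered keys,
# one per line, with duplicates removed.
registered_keys() {
    awk '$1 ~ /^0x[0-9a-fA-F]+$/ { print $1 }' | sort -u
}

# Succeed if KEY is among the keys read from stdin.
# usage: mpathpersist -i -k -d DEVICE | key_is_registered KEY
key_is_registered() {
    registered_keys | grep -qxF "$1"
}
```

After fencing node 2, `mpathpersist -i -k -d /dev/mapper/gfs2 | key_is_registered 0x2` would be expected to fail, while the same check for 0x1 should still succeed.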

Note: testing with the "pcs stonith fence" command may run into a bug where the resource fails to start. As of RHEL 7.4 it has not been fixed.