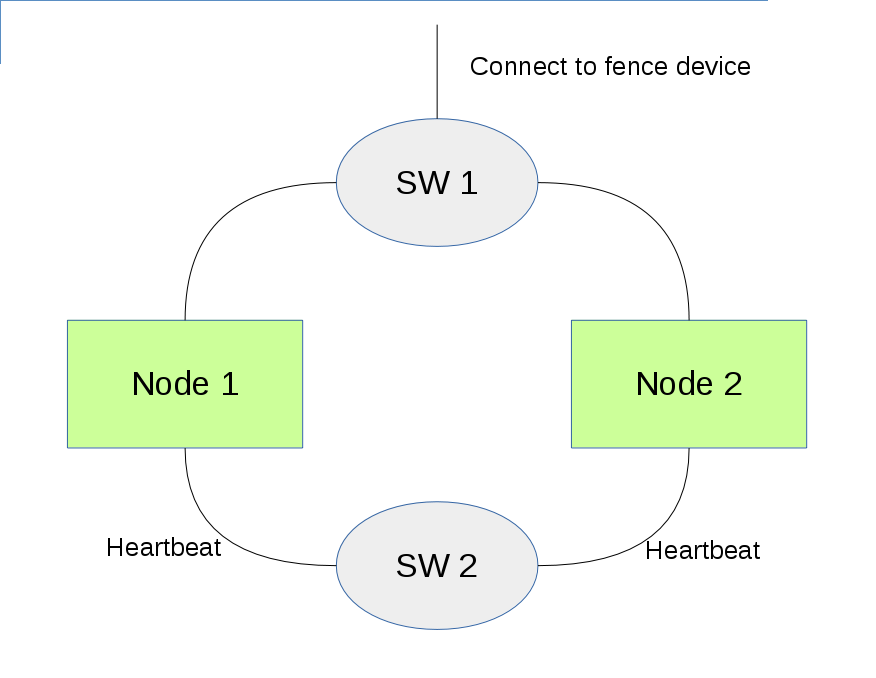

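Use case

- In a two-node high-availability cluster, the heartbeat and the fence devices are on different networks.

- If the heartbeat network fails, the two nodes are split from each other.

- In Corosync 2.x, a two-node cluster runs in two_node mode by default (in practice it is required), so after such a split both nodes remain quorate.

- Because the fence network still works, the two nodes fence each other. This fencing race reboots both nodes, and the service becomes unavailable.

In the RHEL 6/CentOS 6 cluster suite (cman + rgmanager), this problem can be solved with a Quorum Disk built on shared storage. In RHEL 7.4/CentOS 7.4, the Pacemaker stack gained Quorum Device support: an additional machine acts as the Quorum Device, the existing nodes connect to it over the network, and the Quorum Device arbitrates between them.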

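Creating the Quorum Device

Leave the two existing nodes untouched and set up the Quorum Device on a new machine. A cluster can connect to only one Quorum Device, but one Quorum Device can serve multiple clusters, so a single Quorum Device host is enough even if you run several clusters.

On the machine that will host the quorum device (referred to below as the quorum device host), install the required packages: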

[root@qnetd ~]# yum install corosync-qnetd pcs

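The Quorum Device serves its clients through qnetd, which listens on port 5403, so the firewall has to allow that port. For testing purposes, I simply disabled the firewall.

Once the packages are installed, authenticate pcs, create the quorum device, and start the service: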
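Rather than disabling the firewall, the port can be opened explicitly. The following is a minimal sketch, assuming firewalld is the active firewall and that qnetd uses TCP on its default port 5403. The `run` helper and the `DRY_RUN` variable are hypothetical names introduced here so that the commands are only printed unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: open the qnetd port in firewalld instead of disabling the firewall.
# Assumptions: firewalld is in use; qnetd listens on TCP 5403 (its default).
# `run` and DRY_RUN are illustrative helpers, not part of pcs or firewalld.

QNETD_PORT=5403

# Print the command in dry-run mode (the default), execute it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

open_qnetd_port() {
    # Make the rule persistent, then reload so it takes effect.
    run firewall-cmd --permanent --add-port="${QNETD_PORT}/tcp"
    run firewall-cmd --reload
}

open_qnetd_port
```

Running the script as-is only prints the two `firewall-cmd` invocations; running it as root with `DRY_RUN=0` on the quorum device host would apply them.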

[root@qnetd ~]# echo redhat | passwd --stdin hacluster

[root@qnetd ~]# pcs cluster auth qnetd

Username: hacluster

Password:

qnetd: Authorized

[root@qnetd ~]# pcs qdevice setup model net --enable --start

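At this point the quorum device has been created and is running.

Adding the Quorum Device to an existing cluster

With the Quorum Device created, we can add it to the existing cluster.

On the existing nodes, install the following package: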

[root@pcmk1 ~]# yum install corosync-qdevice

Add the Quorum Device to the cluster (the name qnetd must resolve to the quorum device host):

[root@pcmk1 ~]# pcs cluster auth qnetd

[root@pcmk1 ~]# pcs quorum device add model net host=qnetd algorithm=ffsplit

Setting up qdevice certificates on nodes...

pcmk2: Succeeded

pcmk1: Succeeded

Enabling corosync-qdevice...

pcmk1: not enabling corosync-qdevice - corosync is not enabled

pcmk2: not enabling corosync-qdevice - corosync is not enabled

Sending updated corosync.conf to nodes...

pcmk2: Succeeded

pcmk1: Succeeded

Corosync configuration reloaded

Starting corosync-qdevice...

pcmk2: corosync-qdevice started

pcmk1: corosync-qdevice started

Verify that the Quorum Device is connected:

[root@pcmk1 ~]# pcs quorum status

Quorum information

------------------

Date: Mon Sep 18 10:36:53 2017

Quorum provider: corosync_votequorum

Nodes: 2

Node ID: 1

Ring ID: 1/1088

Quorate: Yes

Votequorum information

----------------------

Expected votes: 3

Highest expected: 3

Total votes: 3

Quorum: 2

Flags: Quorate WaitForAll Qdevice

Membership information

----------------------

Nodeid Votes Qdevice Name

1 1 A,V,NMW pcmk1 (local)

2 1 A,V,NMW pcmk2

0 1 Qdevice
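When checking several nodes, it can help to condense this output to one line. The following is a rough sketch that reads `pcs quorum status` output on stdin and assumes the field labels shown above; `check_quorum_status` is a hypothetical helper name, not a pcs command.

```shell
#!/bin/sh
# Sketch: summarize `pcs quorum status` output read from stdin.
# check_quorum_status is an illustrative helper, not part of pcs.
check_quorum_status() {
    awk '
        BEGIN              { qdev = "no" }
        /^Quorate:/        { quorate = $2 }
        /^Expected votes:/ { expected = $3 }
        /^Total votes:/    { total = $3 }
        /^Quorum:/         { quorum = $2 }
        # The Qdevice flag only appears when a quorum device is configured.
        /^Flags:/          { if ($0 ~ /Qdevice/) qdev = "yes" }
        END {
            printf "quorate=%s expected=%s total=%s quorum=%s qdevice=%s\n",
                   quorate, expected, total, quorum, qdev
        }
    '
}
```

A typical invocation would be `pcs quorum status | check_quorum_status` on a cluster node.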

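Test: qdevice and heartbeat on the same network

If the Quorum Device is on the same network as the heartbeat, it effectively acts as a third node for the two-node cluster, and the two original nodes can be viewed as two members of a three-node cluster. (The quorum device algorithm here is Fifty-Fifty split.)

The network configuration is as follows: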
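The vote arithmetic behind this can be sketched in a few lines. votequorum requires a strict majority of the expected votes, that is floor(expected / 2) + 1; the helper names `quorum_threshold` and `is_quorate` below are hypothetical and only illustrate the calculation.

```shell
#!/bin/sh
# quorum_threshold EXPECTED: majority needed for EXPECTED votes.
quorum_threshold() {
    echo $(( $1 / 2 + 1 ))
}

# is_quorate TOTAL EXPECTED: succeed if TOTAL reaches the threshold.
is_quorate() {
    [ "$1" -ge "$(quorum_threshold "$2")" ]
}

# Two nodes plus one qdevice vote give three expected votes.
echo "expected=3 -> quorum=$(quorum_threshold 3)"

# A node cut off from both its peer and the qdevice holds only its own vote.
if is_quorate 1 3; then echo "1 of 3: quorate"; else echo "1 of 3: not quorate"; fi

# A node that still receives the qdevice vote holds two votes.
if is_quorate 2 3; then echo "2 of 3: quorate"; else echo "2 of 3: not quorate"; fi
```

This matches the status output earlier in the post, where three expected votes yield a quorum of 2.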

# /etc/hosts

## Netmask 10.72.38.x/26

## fence

10.72.36.177 pcmk1-out

10.72.37.144 pcmk2-out

10.72.37.26 qnetd-out

## For heartbeat

10.72.38.130 pcmk1

10.72.38.131 pcmk2

10.72.38.132 qnetd

[root@qnetd ~]# pcs qdevice status net --full

QNetd address: *:5403

TLS: Supported (client certificate required)

Connected clients: 2

Connected clusters: 1

Maximum send/receive size: 32768/32768 bytes

Cluster "mycluster":

Algorithm: Fifty-Fifty split

Tie-breaker: Node with lowest node ID

Node ID 1:

Client address: ::ffff:10.72.38.130:37610

HB interval: 8000ms

Configured node list: 1, 2

Ring ID: 1.8

Membership node list: 1, 2

TLS active: Yes (client certificate verified)

Vote: ACK (ACK)

Node ID 2:

Client address: ::ffff:10.72.38.131:47658

HB interval: 8000ms

Configured node list: 1, 2

Ring ID: 1.8

Membership node list: 1, 2

TLS active: Yes (client certificate verified)

Vote: No change (ACK)

1. If the heartbeat network of pcmk1 is disconnected (by setting the corresponding NIC to link down on the VM host), pcmk1 loses quorum and is fenced by pcmk2.

#### pcmk1 is not quorate ####

Sep 20 10:58:35 [11400] pcmk1 pengine: warning: cluster_status: Fencing and resource management disabled due to lack of quorum

Sep 20 10:58:35 [11400] pcmk1 pengine: info: determine_online_status_fencing: Node pcmk1 is active

Sep 20 10:58:35 [11400] pcmk1 pengine: info: determine_online_status: Node pcmk1 is online

Sep 20 10:58:35 [11400] pcmk1 pengine: warning: pe_fence_node: Node pcmk2 is unclean because the node is no longer part of the cluster

Sep 20 10:58:35 [11400] pcmk1 pengine: warning: determine_online_status: Node pcmk2 is unclean

Sep 20 10:58:35 [11400] pcmk1 pengine: info: unpack_node_loop: Node 1 is already processed

Sep 20 10:58:35 [11400] pcmk1 pengine: info: unpack_node_loop: Node 2 is already processed

Sep 20 10:58:35 [11400] pcmk1 pengine: info: unpack_node_loop: Node 1 is already processed

Sep 20 10:58:35 [11400] pcmk1 pengine: info: unpack_node_loop: Node 2 is already processed

Sep 20 10:58:35 [11400] pcmk1 pengine: info: common_print: fence_pcmk1 (stonith:fence_rhevm): Started pcmk1

Sep 20 10:58:35 [11400] pcmk1 pengine: info: common_print: fence_pcmk2 (stonith:fence_rhevm): Started pcmk2 (UNCLEAN)

Sep 20 10:58:35 [11400] pcmk1 pengine: warning: custom_action: Action fence_pcmk2_stop_0 on pcmk2 is unrunnable (offline)

Sep 20 10:58:35 [11400] pcmk1 pengine: warning: stage6: Node pcmk2 is unclean!

Sep 20 10:58:35 [11400] pcmk1 pengine: notice: stage6: Cannot fence unclean nodes until quorum is attained (or no-quorum-policy is set to ignore)

Sep 20 10:58:35 [11400] pcmk1 pengine: notice: LogActions: Stop fence_pcmk1 (Started pcmk1)

Sep 20 10:58:35 [11400] pcmk1 pengine: notice: LogActions: Stop fence_pcmk2 (Started pcmk2 - blocked)

#### pcmk2 is quorate and fences pcmk1 ####

Sep 20 10:58:46 [1475] pcmk2 pengine: warning: determine_online_status: Node pcmk1 is unclean

Sep 20 10:58:46 [1475] pcmk2 pengine: info: determine_online_status_fencing: Node pcmk2 is active

Sep 20 10:58:46 [1475] pcmk2 pengine: info: determine_online_status: Node pcmk2 is online

Sep 20 10:58:46 [1476] pcmk2 crmd: notice: te_fence_node: Requesting fencing (reboot) of node pcmk1 | action=9 timeout=60000

Sep 20 10:58:53 [1472] pcmk2 stonith-ng: notice: log_operation: Operation 'reboot' [1612] (call 2 from crmd.1476) for host 'pcmk1' with device 'fence_pcmk1' returned: 0 (OK)

Sep 20 10:58:53 [1472] pcmk2 stonith-ng: notice: remote_op_done: Operation reboot of pcmk1 by pcmk2 for crmd.1476@pcmk2.123eaed4: OK

#### quorum device notices pcmk1 is disconnected ####

Sep 20 10:58:43 qnetd corosync-qnetd: Sep 20 10:58:43 warning Client ::ffff:10.72.38.130:37610 doesn't sent any message during 20000ms. Disconnecting

2. Likewise, if the heartbeat network of pcmk2 is disconnected (by setting the corresponding NIC to link down on the VM host), pcmk2 loses quorum and is fenced by pcmk1.

#### pcmk1 is quorate and fences pcmk2 ####

Sep 20 11:14:33 [1363] pcmk1 stonith-ng: notice: log_operation: Operation 'reboot' [2065] (call 2 from crmd.1367) for host 'pcmk2' with device 'fence_pcmk2' returned: 0 (OK)

Sep 20 11:14:33 [1363] pcmk1 stonith-ng: notice: remote_op_done: Operation reboot of pcmk2 by pcmk1 for crmd.1367@pcmk1.53f3ee06: OK

#### pcmk2 is not quorate ####

Sep 20 11:14:08 [1475] pcmk2 pengine: warning: determine_online_status: Node pcmk1 is unclean

Sep 20 11:14:08 [1475] pcmk2 pengine: info: determine_online_status_fencing: Node pcmk2 is active

Sep 20 11:14:08 [1475] pcmk2 pengine: info: determine_online_status: Node pcmk2 is online

Sep 20 11:14:08 [1475] pcmk2 pengine: info: common_print: fence_pcmk1 (stonith:fence_rhevm): Started pcmk2

Sep 20 11:14:08 [1475] pcmk2 pengine: info: common_print: fence_pcmk2 (stonith:fence_rhevm): Started pcmk1 (UNCLEAN)

Sep 20 11:14:08 [1475] pcmk2 pengine: info: common_print: test-res1 (ocf::heartbeat:Dummy): Started pcmk1 (UNCLEAN)

Sep 20 11:14:08 [1475] pcmk2 pengine: warning: custom_action: Action fence_pcmk2_stop_0 on pcmk1 is unrunnable (offline)

Sep 20 11:14:08 [1475] pcmk2 pengine: warning: custom_action: Action test-res1_stop_0 on pcmk1 is unrunnable (offline)

Sep 20 11:14:08 [1475] pcmk2 pengine: warning: stage6: Node pcmk1 is unclean!

Sep 20 11:14:08 [1475] pcmk2 pengine: notice: stage6: Cannot fence unclean nodes until quorum is attained (or no-quorum-policy is set to ignore)

#### quorum device notices pcmk2 is disconnected ####

Sep 20 11:14:13 qnetd corosync-qnetd: Sep 20 11:14:13 warning Client ::ffff:10.72.38.131:48912 doesn't sent any message during 20000ms. Disconnecting

3. If the network of qnetd is disconnected, the two-node cluster keeps providing service normally.

Sep 20 13:32:05 qnetd corosync-qnetd: Sep 20 13:32:05 warning Client ::ffff:10.72.38.130:58192 doesn't sent any message during 20000ms. Disconnecting

Sep 20 13:32:25 qnetd corosync-qnetd: Sep 20 13:32:25 warning Client ::ffff:10.72.38.131:40596 doesn't sent any message during 20000ms. Disconnecting

[root@pcmk2 ~]# pcs quorum status

Quorum information

------------------

Date: Wed Sep 20 13:33:33 2017

Quorum provider: corosync_votequorum

Nodes: 2

Node ID: 2

Ring ID: 1/32

Quorate: Yes

Votequorum information

----------------------

Expected votes: 3

Highest expected: 3

Total votes: 2

Quorum: 2

Flags: Quorate Qdevice

Membership information

----------------------

Nodeid Votes Qdevice Name

1 1 A,NV,NMW pcmk1

2 1 A,NV,NMW pcmk2 (local)

0 0 Qdevice (votes 1)
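The Qdevice column changed from A,V,NMW to A,NV,NMW once qnetd became unreachable. The small sketch below spells the flags out; the meanings follow my reading of the corosync-quorumtool documentation (alive / vote / master wins, with an N prefix for the negated form), and `describe_qdevice_flags` is a hypothetical helper name.

```shell
#!/bin/sh
# describe_qdevice_flags "A,NV,NMW": expand the Qdevice column of quorum status.
# Flag meanings are taken from the corosync-quorumtool documentation as I
# understand it; treat them as an assumption and check the man page.
describe_qdevice_flags() {
    old_ifs=$IFS
    IFS=,
    set -- $1          # split the comma-separated flags into $1, $2, ...
    IFS=$old_ifs
    for flag in "$@"; do
        case $flag in
            A)   echo "A: qdevice is alive" ;;
            NA)  echo "NA: qdevice is not alive" ;;
            V)   echo "V: qdevice casts its vote" ;;
            NV)  echo "NV: qdevice does not cast its vote" ;;
            MW)  echo "MW: master wins is set" ;;
            NMW) echo "NMW: master wins is not set" ;;
            *)   echo "$flag: unknown flag" ;;
        esac
    done
}

describe_qdevice_flags "A,V,NMW"
describe_qdevice_flags "A,NV,NMW"
```

Read this way, the NV flag together with "Total votes: 2" shows that the cluster stays quorate on the two node votes alone while the qdevice vote is missing.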

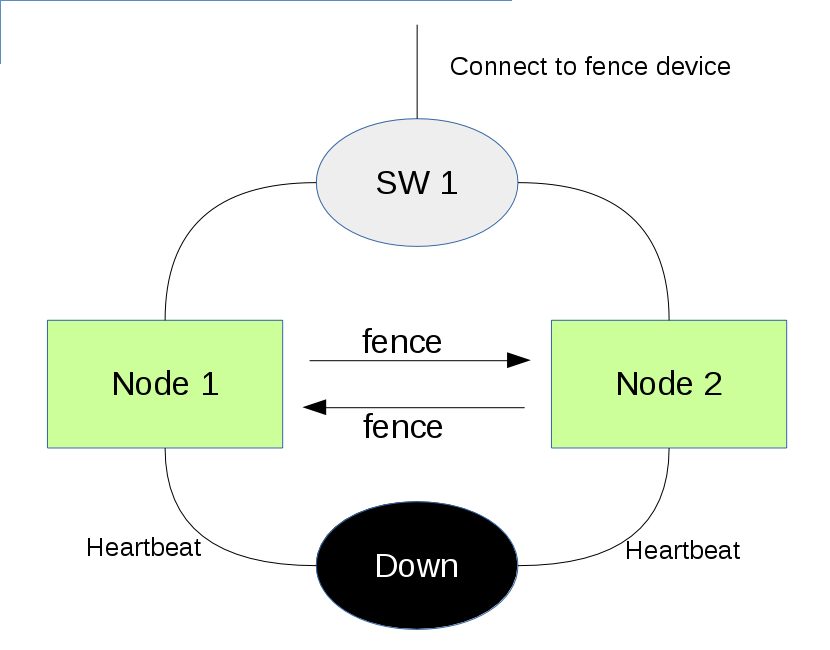

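Test: qdevice and heartbeat on different networks

If the Quorum Device is on a different network from the heartbeat, it can arbitrate when the heartbeat between the two nodes fails and the nodes are isolated from each other. By default its tie-breaker, which behaves like auto_tie_breaker, lets the node with the lowest node ID become quorate so that it can keep providing service. The node with the higher node ID is fenced.

The network layout is as follows: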
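The tie-breaking rule can be modeled roughly as follows. This is a deliberately simplified sketch for an even split of a two-node cluster, not qnetd's actual implementation: it assumes each argument is the node ID of a single-node partition that qnetd can still reach, and `ffsplit_winner` is a hypothetical helper name.

```shell
#!/bin/sh
# ffsplit_winner ID...: given the node IDs (one per single-node partition)
# that qnetd can still reach, print the ID that would receive the vote
# under the default "lowest node ID" tie-breaker.
# Simplified model for illustration only; not the real qnetd algorithm.
ffsplit_winner() {
    winner=""
    for id in "$@"; do
        if [ -z "$winner" ] || [ "$id" -lt "$winner" ]; then
            winner=$id
        fi
    done
    [ -n "$winner" ] && echo "$winner"
}

# Heartbeat is down but both nodes still reach qnetd: the lowest ID wins.
echo "both nodes reach qnetd: vote goes to node $(ffsplit_winner 1 2)"

# Only node 2 still reaches qnetd: it is the only candidate.
echo "only node 2 reaches qnetd: vote goes to node $(ffsplit_winner 2)"
```

Under this model, whichever node's heartbeat link is cut, node 1 keeps the qdevice vote as long as both nodes can still talk to qnetd.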

## Netmask 10.72.38.x/26

## For fence

10.72.36.177 pcmk1-out

10.72.37.144 pcmk2-out

10.72.37.26 qnetd-out

## For heartbeat

10.72.38.130 pcmk1

10.72.38.131 pcmk2

## For quorum device

10.72.38.202 qnetd

[root@qnetd ~]# pcs qdevice status net

QNetd address: *:5403

TLS: Supported (client certificate required)

Connected clients: 2

Connected clusters: 1

Cluster "mycluster":

Algorithm: Fifty-Fifty split

Tie-breaker: Node with lowest node ID

Node ID 2:

Client address: ::ffff:10.72.38.201:42618

Configured node list: 1, 2

Membership node list: 1, 2

Vote: ACK (ACK)

Node ID 1:

Client address: ::ffff:10.72.38.200:60488

Configured node list: 1, 2

Membership node list: 1, 2

Vote: No change (ACK)

1. Disconnect the network of pcmk1: pcmk1 still becomes quorate and pcmk2 is fenced.

Sep 20 15:19:13 [13312] pcmk1 stonith-ng: notice: log_operation: Operation 'reboot' [13770] (call 2 from crmd.13316) for host 'pcmk2' with device 'fence_pcmk2' returned: 0 (OK)

Sep 20 15:18:58 [7944] pcmk2 pengine: warning: stage6: Node pcmk1 is unclean!

Sep 20 15:18:58 [7944] pcmk2 pengine: notice: stage6: Cannot fence unclean nodes until quorum is attained (or no-quorum-policy is set to ignore)

Sep 20 15:19:19 qnetd corosync-qnetd: Sep 20 15:19:19 warning Client ::ffff:10.72.38.201:42618 doesn't sent any message during 20000ms. Disconnecting

2. Disconnect the network of pcmk2: pcmk1 is again the node that becomes quorate, and pcmk2 is fenced.

Sep 20 16:05:49 [13312] pcmk1 stonith-ng: notice: log_operation: Operation 'reboot' [16581] (call 3 from crmd.13316) for host 'pcmk2' with device 'fence_pcmk2' returned: 0 (OK)

[2282] pcmk2 corosync notice [QUORUM] This node is within the non-primary component and will NOT provide any services.

[2282] pcmk2 corosync notice [QUORUM] Members[1]: 2

[2282] pcmk2 corosync notice [MAIN ] Completed service synchronization, ready to provide service.
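To pull the fencing outcome out of logs like these, a small filter is enough. The following is a minimal sketch, assuming the `log_operation` line format shown in the excerpts of this post; `fence_results` is a hypothetical helper name.

```shell
#!/bin/sh
# fence_results: read cluster log lines on stdin and print one summary line
# per stonith-ng log_operation entry. The expected line format is the one
# quoted in this post; other Pacemaker versions may log differently.
fence_results() {
    sed -n "s/.*log_operation: Operation '\([a-z]*\)' .* for host '\([^']*\)' with device '\([^']*\)' returned: \([0-9-]*\) (\([^)]*\)).*/action=\1 target=\2 device=\3 rc=\4 result=\5/p"
}
```

It could be used as `fence_results < /var/log/cluster/corosync.log`, where the log path is an assumption that depends on how logging is configured on the nodes.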

References

[1] 10.5. Quorum Devices - High Availability Add-On Reference

https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html-single/High_Availability_Add-On_Reference/index.html#s1-quorumdev-HAAR